The parallel and multithreading properties described in this topic apply only to the BGP Legacy Multiple Queues execution mechanism. They can be used, in addition to integration endpoint transactional settings (refer to the Integration Endpoint Transactional Settings topic in the Data Exchange documentation), to manage processing efficiently.

Note: The recommended 'One Queue' priority-based BGP execution mechanism does not require parallel and multithreading properties, since waiting BGPs are prioritized for execution based on the priority of the BGP and its created time. Refer to the BGP One Queue topic.

Configuration properties allow admin users to manage system behavior for a wide range of functionality, for example, the management of background processes and web services.

Note: Apply changes to these settings with care and test them properly before promoting them to production. Parallel settings and multithreading can easily result in optimistic locking issues, which can degrade performance rather than deliver the desired gain.

For SaaS systems, properties are set within the Self-Service UI by going to the Configuration Properties tab for your system. If the properties you need are not shown, submit an issue within the Stibo Systems Service Portal to complete the configuration. For on-prem systems, properties shown in this topic should be added to the sharedconfig.properties (or config.properties, if only using a single server) file.

- Size: The number of BGPs that can run at the same time in the same queue. For example, for data profiling, setting Size=0 on one server and Size=1 on another server forces all data profiling to be handled by the second server. Recommended practice is to use one server to handle background processes and another to handle ad hoc user requests, which can give users improved system responsiveness.

- Parallel: The following BGP types support the parallel option, which makes the same BGP use multiple CPUs on the server (multithreading) to finish faster:

  - Data Profiling
  - Experian Email Validation
  - List Processing
  - Match Code Generation
  - Matching and Linking
  - Match and Merge Import
  - Match and Merge Match Tuning
  - Policy Evaluation

Use the BackgroundProcess.Queue.[name of queue].Parallel=[number of CPUs] property where the [name of queue] designation is replaced with the name of the queue for which the setting applies and the [number of CPUs] designation determines how many CPUs are used.

A queue parallel of 1 means only one CPU is used for the BGP, while a queue parallel of 2 means two CPUs are used for the BGP.

Cores are the CPUs available to handle parallel processing. The CPU setting must not exceed the number of available cores. To view the number of cores on the application server, refer to the 'No of cores' parameter in the Activity tab 'Details' section of the Admin Portal, as defined in the Activity topic in the Administration Portal documentation.

Note: The underlying implementation of the parallel queue property varies significantly based on the background process type. Starting 'X' number of jobs uses at least 'X' times as many resources; those resources are taken from other processes, which could impact performance. As such, thorough performance testing with production-level hardware and peak traffic conditions is highly recommended.

- ProcessType: This property is used to rename the default queue for a specific type of background process, which allows the administrator to control the processes that run on each server.

Use the BackgroundProcess.ProcessType.[process type ID].Queue=[name of queue] property where the [process type ID] and the [name of queue] designations are replaced with the actual type ID and the actual name of the queue for which the setting applies.

For steps to change your configuration, refer to the Modifying Configuration for Legacy BGP Queues topic.
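The rule that a queue's Parallel value must not exceed the number of available cores can be checked before a change is promoted. The following is a minimal Python sketch, not a STEP tool: the function name `find_oversized_parallel` is hypothetical, the core count is assumed to be read manually from the 'No of cores' parameter in the Admin Portal, and the parsing assumes plain `key=value` lines with `#` comments.

```python
import re

# Matches the BackgroundProcess.Queue.[name of queue].Parallel property
# described above and captures the queue name.
PARALLEL_KEY = re.compile(r"^BackgroundProcess\.Queue\.(?P<queue>[^.]+)\.Parallel$")


def find_oversized_parallel(properties_text: str, available_cores: int) -> dict[str, int]:
    """Return {queue name: Parallel value} for queues whose Parallel exceeds the cores.

    Hypothetical helper for illustration only; it is not part of STEP.
    """
    oversized: dict[str, int] = {}
    for raw_line in properties_text.splitlines():
        line = raw_line.strip()
        # Assumption: blank lines and '#' comments carry no settings.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        match = PARALLEL_KEY.match(key)
        if not match:
            continue
        queue = match.group("queue")
        parallel = int(value)
        if parallel > available_cores:
            oversized[queue] = parallel
        else:
            # The last value read is the active one, so a later valid
            # value clears an earlier oversized one.
            oversized.pop(queue, None)
    return oversized


sample = """
BackgroundProcess.Queue.DataProfilerParallel.Parallel=4
BackgroundProcess.Queue.In.Parallel=8
BackgroundProcess.Queue.In.Size=1
"""

# With 6 cores, only the In queue (Parallel=8) is flagged.
print(find_oversized_parallel(sample, available_cores=6))  # {'In': 8}
```

A check like this only catches the hard limit. It does not replace the performance testing recommended in the note above.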

Single Application Server and Single Queue Example

This example includes inbound integration endpoints (IIEPs) but can also be applied to any feature that uses background processes.

Consider the following config.properties settings for a single server called appserver1:

BackgroundProcess.Queue.DataProfilerParallel.Parallel=4
BackgroundProcess.Queue.DataProfilerParallel.Size=4
BackgroundProcess.Queue.In.Parallel=8
BackgroundProcess.Queue.In.Size=1

Combined with these workbench parameter settings:

- The match and merge IIEP has 'Queue for endpoint processes=IN'

- The bulk update IIEP has 'Queue for endpoint processes=IN'

Result

A configuration with these settings results in the following activity on the single server:

- A 'Match and Merge IIEP' background process will not run simultaneously with a 'Bulk Update' background process. Both use the IN queue, which has size 1, limiting it to only one (1) background process at a time.

- A 'Match and Merge IIEP' background process will use up to 8 cores due to the parallel setting of 8 for the IN queue.

Two Application Servers Example

This example includes inbound integration endpoints (IIEPs) but can also be applied to any feature that uses background processes.

In addition to appserver1 described above, add a second application server (called appserver2) with the following settings in its own config.properties file (settings in sharedconfig.properties apply to all application servers):

BackgroundProcess.Queue.DataProfilerParallel.Size=0
BackgroundProcess.Queue.In.Size=0

Result

A configuration with these settings results in the following activity on the two servers:

- appserver1 runs up to four (4) data profiling processes in parallel. Data profiling process number five (5) remains in queue until one of the first four is complete.

- appserver2 never runs 'Match and Merge IIEP' or 'Bulk Update IIEP' background processes because this server has a size of 0 for the IN queue, and both of these background processes use the IN queue.

- Since all BGPs run on appserver1, high BGP load does not adversely impact users because appserver2 is reserved for actions in the user interface.
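The effect of the Size settings across the two servers can be reasoned about with a small model. The Python sketch below is a simplified illustration, not how STEP schedules work internally: the helper names are hypothetical, the Size values are copied from the example above, and a queue with no explicit Size on a server is treated as unavailable there, which is an assumption made only for this sketch (real defaults are described in the Default Configuration for Legacy BGP Queues topic).

```python
# Size settings per server, copied from the two-server example above.
SERVER_QUEUE_SIZES = {
    "appserver1": {"DataProfilerParallel": 4, "In": 1},
    "appserver2": {"DataProfilerParallel": 0, "In": 0},
}


def servers_for_queue(queue: str, sizes: dict = SERVER_QUEUE_SIZES) -> list[str]:
    """List the servers that may run BGPs from `queue` (Size greater than 0).

    Assumption for this sketch: a queue missing from a server's settings
    is treated as Size 0 rather than given a product default.
    """
    return sorted(name for name, queues in sizes.items() if queues.get(queue, 0) > 0)


def total_capacity(queue: str, sizes: dict = SERVER_QUEUE_SIZES) -> int:
    """Total number of BGPs from `queue` that can run at once across all servers."""
    return sum(queues.get(queue, 0) for queues in sizes.values())


print(servers_for_queue("In"))                 # ['appserver1']
print(total_capacity("DataProfilerParallel"))  # 4
```

In this model, no queue resolves to appserver2, which matches the result above: appserver2 stays free for user interface requests.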

Single Application Server and Two Queues Example

This example includes inbound integration endpoints (IIEPs) but can also be applied to any feature that uses background processes.

Consider the following config.properties settings for a single server called appserver3:

BackgroundProcess.Queue.InIIEP1.Size=10
BackgroundProcess.Queue.InIIEP2.Size=20

Combined with these workbench parameter settings:

- The match and merge IIEP has 'Queue for endpoint processes=InIIEP1' with 'Transactional settings = None'

- The bulk update IIEP has 'Queue for endpoint processes=InIIEP2' with 'Transactional settings = None'

Result

A configuration with these settings results in the following activity on the server:

- appserver3 runs up to 10 match and merge processes simultaneously. Match and merge process number 11 remains in queue until one of the first 10 is complete. These are the only processes that run on the InIIEP1 queue.

- appserver3 runs up to 20 bulk update background processes simultaneously. Bulk Update process number 21 remains in queue until one of the first 20 is complete. These are the only processes that run on the InIIEP2 queue.
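The arithmetic behind these results is simple: a queue runs at most Size processes, and the rest wait. A minimal Python sketch follows, with the hypothetical helper name `split_running_and_waiting`. It models only the counts, not the order in which waiting processes are picked up.

```python
def split_running_and_waiting(submitted: int, queue_size: int) -> tuple[int, int]:
    """Return (running, waiting) for `submitted` BGPs on a queue of `queue_size`."""
    if submitted < 0 or queue_size < 0:
        raise ValueError("submitted and queue_size must be non-negative")
    running = min(submitted, queue_size)
    return running, submitted - running


# InIIEP1 (Size=10): the 11th match and merge process waits.
print(split_running_and_waiting(11, 10))  # (10, 1)
# InIIEP2 (Size=20): the 21st bulk update process waits.
print(split_running_and_waiting(21, 20))  # (20, 1)
```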

Single OIEP in Parallel Example

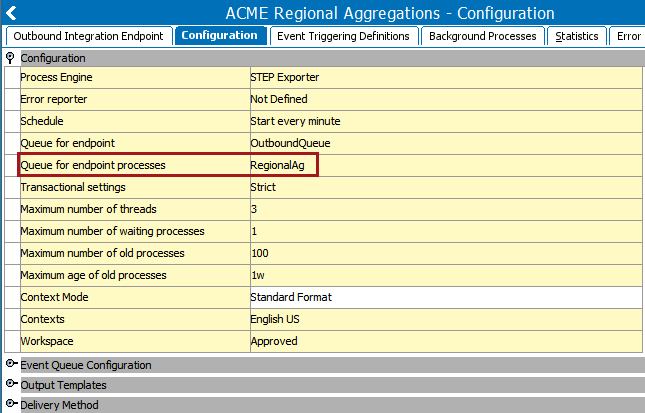

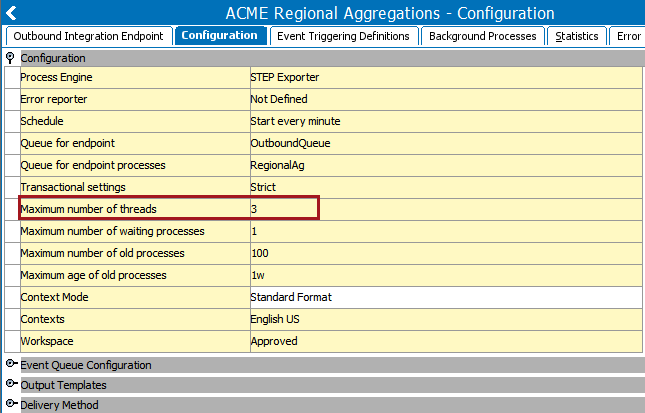

In the previous examples, the techniques involved configuring several BGPs (using a standard queue, for example, 'OUT') to use parallel processing and/or to change the number of processes that can run concurrently. In some situations, however, the requirement may be to configure a single OIEP to run in parallel to process the related data faster. In this case, define a dedicated queue specifically for the OIEP using the 'Queue for endpoint processes' parameter on the Configuration tab as shown in the image below.

Note: SaaS environments with STEP 11.1 or later with a Cassandra database cannot set up OIEPs in parallel if the 'One Queue' feature is enabled. 'One Queue' replaces the Queue for Endpoint and Queue for Endpoint Processes parameters with a single 'Priority' parameter. For more information, refer to the BGP One Queue topic.

For example, the Acme company has a requirement to generate a weekly report of its total sales. Acme has locations in both the eastern and western parts of the country; however, because there are fewer locations in the west, less data processing is required for the west. The bulk of the data processing is generated by the locations in the east.

For this setup, the STEP system has a total of 24 cores and the 'RegionalAg' dedicated queue is defined to aggregate the regional sales figures via the following OIEPs:

- 'OIEP-east' is an OIEP that only processes data from the eastern locations. It is configured to run in parallel and the 'Maximum number of threads' parameter sets the number of CPU cores to three (3) to handle the larger data load.

- 'OIEP-west' is an OIEP that only processes data from the western locations. The 'Maximum number of threads' parameter uses the default setting of one (1) CPU core since there is less data to process. This OIEP does not run in parallel since it only uses one (1) core.

Although this STEP system has a total of 24 cores, the entry for the config.properties or sharedconfig.properties file (depending on the specific setup) is:

BackgroundProcess.Queue.RegionalAg.Parallel=3

With this setting, the OIEP's defined queue (RegionalAg) can have up to a maximum of three (3) parallel threads. This allows an end user to adjust this queue to use 1 thread (default), 2, or 3, but any larger value is ignored, even though the system has a total of 24 cores.

Although this property is not strictly required to enable parallel threads for a given OIEP, it allows a STEP administrator to set sensible boundaries and maintain optimal performance of the overall system.

Important: The example above is for a system with 24 cores, but some systems have fewer cores. Consider the impact that running in parallel will have on your system. Generally, the number of threads should not exceed 25 to 30 percent of the total number of cores on the system. This leaves both the STEP application and the underlying operating system the resources they need to function properly.

For example, if a system has a total of 4 cores, it is not recommended to define 3 threads for a queue as this would most likely result in degraded performance of the overall system since 75 percent of the resources are being consumed by a single OIEP.

To ensure that a production (or production-like) environment is not negatively impacted by a specific parallel setup, it is always recommended to first test the setup on a development or sandbox environment.
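The 25 to 30 percent guideline can be turned into a quick calculation. The Python sketch below is only an illustration of that arithmetic: the helper names are hypothetical, results are rounded down to whole threads, and the floor of one thread is an assumption based on the default 'Maximum number of threads' value of one (1).

```python
def recommended_thread_range(total_cores: int) -> tuple[int, int]:
    """Return (low, high) thread counts at 25 and 30 percent of `total_cores`.

    Integer arithmetic is used so the result always rounds down.
    Assumption: at least one thread is always allowed.
    """
    if total_cores < 1:
        raise ValueError("total_cores must be at least 1")
    low = max(1, total_cores * 25 // 100)
    high = max(low, total_cores * 30 // 100)
    return low, high


def core_share_percent(threads: int, total_cores: int) -> float:
    """Percentage of the system's cores consumed by `threads`."""
    return 100 * threads / total_cores


print(recommended_thread_range(24))  # (6, 7)
print(recommended_thread_range(4))   # (1, 1)
print(core_share_percent(3, 4))      # 75.0
print(core_share_percent(3, 24))     # 12.5
```

For the 24-core example, the three threads used by 'OIEP-east' consume 12.5 percent of the cores, well inside the guideline. The same three threads on a 4-core system consume 75 percent.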

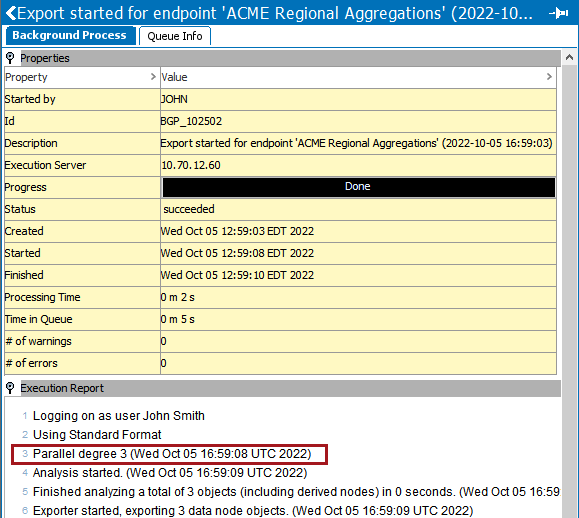

Once an OIEP is configured to run in parallel, the Execution Report (as shown below) contains an additional line item to indicate that the process is running in parallel.

Result

A configuration with these settings results in the following activity on the server:

- The 'OIEP-east' OIEP runs one (1) BGP for the RegionalAg queue but runs in parallel using three (3) cores to process the data faster.

- The 'OIEP-west' OIEP runs one (1) BGP for the RegionalAg queue and uses only one (1) core to process data in the same manner as a standard (default) BGP. This OIEP does not run in parallel since it only uses one (1) core.

Understanding config.properties Settings in On-prem Systems

Configuration properties provide on-premises system administrators with options for controlling system behavior, logging, processing, storage of information on the application server(s), and many other things. Configuration properties are specified in the config.properties or sharedconfig.properties files on the application server(s). There is one config.properties file per application server so a clustered environment may have multiple config.properties files, with each containing settings local to a particular application server.

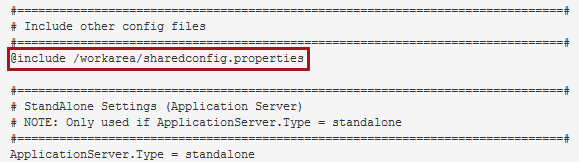

Conversely, the sharedconfig.properties file is global so there will be only one file per setup, with all settings being applicable to all application servers. Most properties are set in the sharedconfig.properties file, with the config.properties file being used only for those properties that expressly require it.

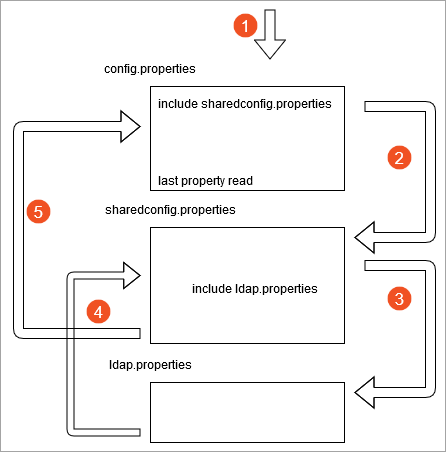

The config.properties file is read first, line-by-line, until the first 'include' statement—typically the sharedconfig.properties file as shown below.

When an 'include' statement is found, the included file is read line-by-line (and any 'include' statements in those files are also read) before reading is continued in the config.properties file. A property can exist more than once in the properties files and the last value read is the only active value. This allows properties in the config.properties that come after an 'include' statement to overwrite the same properties in the sharedconfig.properties file.

For more information on config.properties settings, refer to the Configuration topic in the Administration Portal documentation.

To set the Size of a queue, use the BackgroundProcess.Queue.[name of queue].Size=[number of allowed concurrent processes] property, where the [name of queue] designation is replaced with the name of the queue for which the setting applies and the [number of allowed concurrent processes] designation determines how many background processes can run concurrently.

A queue size of 1 means only one process can run at a time, while a queue size of 2 means two processes can run concurrently.

For more information, refer to the Default Configuration for Legacy BGP Queues topic, and the Monitoring an IIEP via Background Process topic or the Monitoring an OIEP via Background Process topic in the Data Exchange documentation.