Review the following considerations before starting the setup for Kafka Delivery:

- Compatibility
- Delivery Method Options
- Topic Partitions
- Handling for dangling references
- SASL authentication, if needed

Each area is explained in further detail below.

When configuring Kafka, STEPXML is recommended for the messaging format. Other formats supported for integration with STEP will also work, but the documentation has been written for STEPXML.

Compatibility

Integration with Kafka for event messaging is supported via the following versions of Apache Kafka:

- 3.5.1
- 3.4x
- 3.2x
- 3.0x
- 2.6x
- 2.5x
- 2.4x

For information on receiver options using Kafka, refer to the Kafka Streaming Receiver or the Kafka Receiver topics.

Delivery Method Options

The following options are available for Kafka delivery:

Note: Option 1 is the recommended and preferred method.

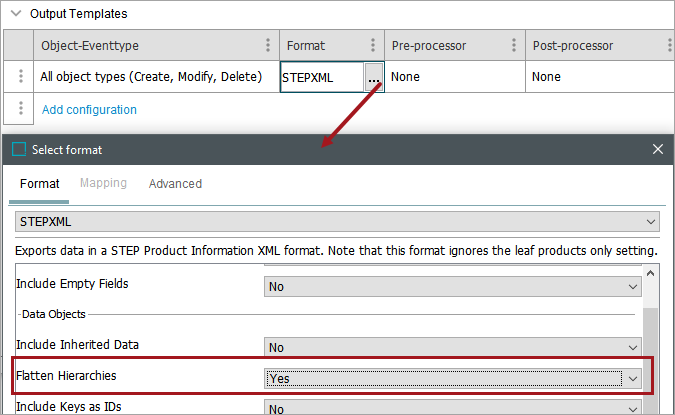

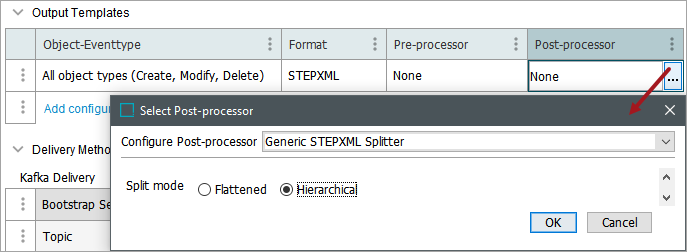

Option 1 — OIEP with the STEPXML format using inherited values

- On the endpoint, in the Format parameter, select STEPXML and set the 'Flatten Hierarchies' parameter to 'Yes'.
- For the Post-processor parameter, select Generic STEPXML Splitter and set the 'Split mode' to Hierarchical. The Generic STEPXML Splitter divides STEPXML messages into multiple valid STEPXML fragments, each containing a single node per STEPXML file.

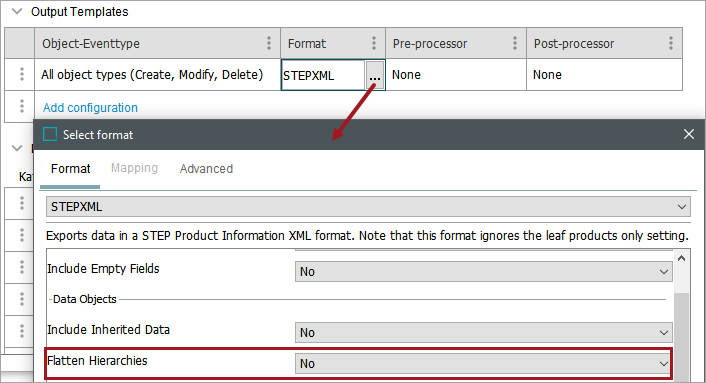

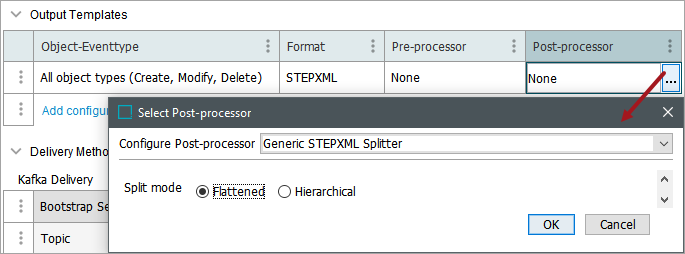

Option 2 — Split STEPXML messages to events for a single node per message and reduce the event XML size

- On the endpoint, in the Format parameter, select STEPXML and set the 'Flatten Hierarchies' parameter to 'No'. Flattening is not needed at this step because the hierarchy is flattened in the post-processor.
- On the endpoint, select the Generic STEPXML Splitter post-processor. The Generic STEPXML Splitter divides STEPXML messages into multiple valid STEPXML fragments, each containing a single node per STEPXML file.
- Use the Flattened option to further reduce the size of the individual event messages. The Flattened option denormalizes any child hierarchies in STEPXML so that they are written out as a single root hierarchy.

Note: If using inherited values in the export, it is recommended to flatten the values in STEPXML format as demonstrated in Option 1 above.
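As a rough illustration of what "one single node per message" means, the sketch below lifts nested nodes out of a small hierarchy and emits one standalone fragment per node. It is not the Generic STEPXML Splitter's implementation: the sample input is a simplified, assumed STEPXML-like structure, and the function name `split_one_node_per_message` and the use of a `ParentID` attribute to record the former parent are illustrative choices.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical STEPXML-like input: element and attribute names
# are illustrative and do not reproduce the full STEPXML schema.
SAMPLE = """
<STEP-ProductInformation>
  <Products>
    <Product ID="P1" UserTypeID="Family">
      <Name>Shoes</Name>
      <Product ID="P2" UserTypeID="Item">
        <Name>Sneaker</Name>
      </Product>
    </Product>
  </Products>
</STEP-ProductInformation>
"""


def split_one_node_per_message(xml_text):
    """Return one standalone XML string per Product, with nested children lifted out."""
    root = ET.fromstring(xml_text)
    fragments = []

    def visit(product, parent_id):
        # Detach nested Product children so each fragment holds a single node.
        children = product.findall("Product")
        for child in children:
            product.remove(child)
        if parent_id is not None:
            # Record the former parent as an attribute instead of by nesting.
            product.set("ParentID", parent_id)
        wrapper = ET.Element(root.tag)
        ET.SubElement(wrapper, "Products").append(product)
        fragments.append(ET.tostring(wrapper, encoding="unicode"))
        for child in children:
            visit(child, product.get("ID"))

    for top in root.findall("./Products/Product"):
        visit(top, None)
    return fragments


if __name__ == "__main__":
    for fragment in split_one_node_per_message(SAMPLE):
        print(fragment)
```

Each returned string is a small, independently parseable document, which is the property that lets every node travel as its own Kafka message.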

For more information on post-processors, refer to the Configure the Pre-processor and Post-processor section of the following topics:

Option 3 — Configure compression of the event content

LZ4 is the supported compression format. If you enable compression on the integration endpoint and integrate with custom-developed Kafka clients, those producers and consumers must be able to handle compression and decompression of LZ4 event content.

Kafka producer compression can also be enabled on the OIEP by defining the Kafka-specific compression.type property in ExtraDriverOptions, as described in the Kafka Delivery Method topic and in the online Kafka documentation.
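The following minimal sketch shows how such a property could be assembled into an ExtraDriverOptions value. It assumes the comma-separated key=value format used by the ExtraDriverOptions examples later in this topic; the helper name `build_extra_driver_options` is hypothetical and not part of STEP.

```python
def build_extra_driver_options(options):
    """Join Kafka client properties into one comma-separated key=value string."""
    for key, value in options.items():
        if "," in key or "," in value:
            # A comma would be ambiguous in this naive comma-separated format.
            raise ValueError(f"comma not supported in option {key!r}")
    return ",".join(f"{key}={value}" for key, value in options.items())


# compression.type is a standard Kafka producer property; lz4 is one of its values.
value = build_extra_driver_options({"compression.type": "lz4"})
print(value)  # compression.type=lz4
```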

Topic Partitions

OIEPs using the Kafka Delivery Method can deliver to topics with multiple partitions.

By default, the key for all messages published from STEP is the ID of the OIEP responsible. This behavior can be changed by removing the template macro, so no key is produced, or entering a new value for the 'Message Metadata Source' Template field when 'Template' is selected in the Kafka Delivery Method configuration. When no key exists, Kafka distributes messages evenly between partitions.

The following variables can be used to produce message keys:

- $endpointId - ID of the OIEP publishing the message.
- $nodeType - The type of node being published (for example, product or classification).
- $nodeId - ID of the STEP node being published.

Note: Because STEP IDs are not unique across node types, $nodeType can be combined with $nodeId to create a unique identifier.
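To make the effect of a key template concrete, here is a small sketch that expands a combined key and maps it to a partition. The template string `$nodeType:$nodeId` (including the colon separator), the endpoint ID `MyOIEP`, and the partition count are all assumptions for illustration, and `pick_partition` uses CRC32 as a stand-in hash rather than the hash Kafka's own partitioner uses; the point is only that equal keys always map to the same partition.

```python
import zlib
from string import Template

# Hypothetical key template combining two of the variables listed above.
KEY_TEMPLATE = Template("$nodeType:$nodeId")


def message_key(endpoint_id, node_type, node_id):
    """Expand the key template for one published node."""
    return KEY_TEMPLATE.substitute(
        endpointId=endpoint_id, nodeType=node_type, nodeId=node_id
    )


def pick_partition(key, partition_count):
    """Stand-in for a key-hash partitioner (CRC32 here, not Kafka's own hash)."""
    return zlib.crc32(key.encode("utf-8")) % partition_count


if __name__ == "__main__":
    for node_type, node_id in [("product", "100"), ("classification", "100")]:
        key = message_key("MyOIEP", node_type, node_id)
        print(key, "-> partition", pick_partition(key, 6))
```

Two nodes that share the ID `100` but differ in type get distinct keys here, which is the reason for combining the two variables.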

If additional metadata sources are required but are not covered by the Template option, use the ‘Function’ option to parse the output message, set the message key, and set the message headers for the first MB of the message.

For more information, refer to the Kafka Delivery Method topic.

Handling for Dangling References

The Kafka connector and the Generic STEPXML Splitter can run into known issues when processing references where the referred-to node has not yet been created in the target system. This can create 'dangling references'. For more information, refer to the Dangling References in STEPXML topic.

SASL Authentication

Simple Authentication and Security Layer (SASL) authentication (both SASL_PLAINTEXT with PLAIN and SASL_SSL with PLAIN, OAUTHBEARER, and SCRAM) is supported for the Kafka receiver and delivery options. SASL gives you more data security options and is an alternative to the other authentication methods supported by the Kafka connector, which include AWS MSK, Heroku, and Aiven (with TLS Client Certificate Authentication).

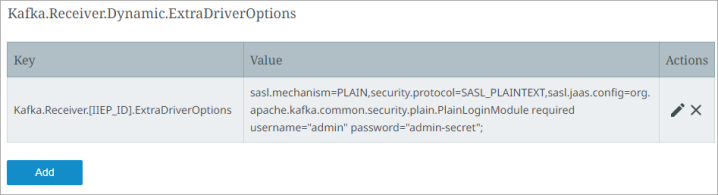

Use the Self-Service UI to edit the Kafka.Receiver.Dynamic.ExtraDriverOptions configuration property, where 'Dynamic' is a placeholder for your endpoint ID [IIEP_ID].

Important: Prior to configuration, dropdown parameters that rely on a property are empty. Hovering over the dropdown or clicking a dropdown displays the required property name to configure. To display the value(s), in the Self-Service UI, select the environment, and on the 'Configuration properties' tab, configure the property for your system. Refer to the Self-Service User Guide for information about setting configuration properties, including the use of the ${CUSTOMER_SECRETS_ROOT} and ${CUSTOMER_CONFIG_ROOT} variables.

Allow a few minutes for changes made in the Self-Service UI 'Configuration properties' tab to display in the workbench.

For SaaS systems, properties are set within the Self-Service UI by going to the Configuration Properties tab for your environment. Some changes may require you to restart the server and/or user interface (i.e., the workbench) before they take effect. If the properties you need are not shown, submit an issue within the Stibo Systems Service Portal to complete the configuration.

Below is an example configuration for PLAIN username/password authentication in a SaaS environment.

Configure the value of the ExtraDriverOptions as shown in the example below (you can copy this format):

- Key field: This should indicate the IIEP ID and must be entered as:

  Kafka.Receiver.[IIEP_ID].ExtraDriverOptions (Replace [IIEP_ID] with the STEP ID of your endpoint.)

- Value field: Enter the following string:

  sasl.mechanism=PLAIN,security.protocol=SASL_PLAINTEXT,sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="admin-secret";

For SASL_SSL with PLAIN username / password authentication, the Keystore configuration in the SSL part of the Kafka receiver or delivery option can be omitted. A keystore is needed only if the Kafka server is required to trust the Stibo Systems SSL certificate. A Truststore Location / Password is required to indicate that your system trusts the Kafka server's certificate.

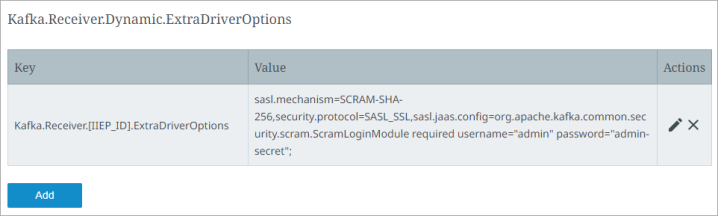

Below is a sample configuration for SCRAM authentication in a SaaS environment:

- Key field: This should indicate the IIEP ID and must be entered as:

  Kafka.Receiver.[IIEP_ID].ExtraDriverOptions (Replace [IIEP_ID] with the STEP ID of your endpoint.)

- Value field: Enter the following string:

  sasl.mechanism=SCRAM-SHA-256,security.protocol=SASL_SSL,sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="admin" password="admin-secret";
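Because these values are long single-line strings, a quick consistency check before pasting them into the Self-Service UI can catch typos. The sketch below is an assumption-laden helper, not a STEP or Kafka tool: the function names are hypothetical, the parser naively splits on commas (so it would misread a password containing a comma), and only the PLAIN and SCRAM mechanisms are covered.

```python
# Standard Kafka JAAS login module class names for the mechanisms covered here.
LOGIN_MODULES = {
    "PLAIN": "org.apache.kafka.common.security.plain.PlainLoginModule",
    "SCRAM-SHA-256": "org.apache.kafka.common.security.scram.ScramLoginModule",
    "SCRAM-SHA-512": "org.apache.kafka.common.security.scram.ScramLoginModule",
}


def parse_extra_driver_options(value):
    """Split 'k1=v1,k2=v2' into a dict; only the first '=' separates key and value."""
    options = {}
    for pair in value.split(","):
        key, sep, val = pair.partition("=")
        if not sep:
            raise ValueError(f"missing '=' in {pair!r}")
        options[key.strip()] = val.strip()
    return options


def check_sasl_options(options):
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    mechanism = options.get("sasl.mechanism", "")
    protocol = options.get("security.protocol", "")
    jaas = options.get("sasl.jaas.config", "")
    if protocol not in ("SASL_PLAINTEXT", "SASL_SSL"):
        problems.append(f"unexpected security.protocol: {protocol!r}")
    expected = LOGIN_MODULES.get(mechanism)
    if expected is None:
        problems.append(f"mechanism not covered by this sketch: {mechanism!r}")
    elif not jaas.startswith(expected + " required"):
        problems.append(f"sasl.jaas.config should start with {expected} required")
    if not jaas.endswith(";"):
        problems.append("sasl.jaas.config must end with a semicolon")
    return problems


# The PLAIN example value from this topic, split across lines for readability.
PLAIN_VALUE = (
    "sasl.mechanism=PLAIN,security.protocol=SASL_PLAINTEXT,"
    "sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule "
    'required username="admin" password="admin-secret";'
)

if __name__ == "__main__":
    print(check_sasl_options(parse_extra_driver_options(PLAIN_VALUE)))
```

A mismatch such as setting sasl.mechanism to SCRAM-SHA-256 while leaving PlainLoginModule in sasl.jaas.config is reported as a problem instead of surfacing later as a connection failure.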

These configuration options are taken from the Confluent documentation on configuring SASL authentication: https://docs.confluent.io/platform/current/kafka/overview-authentication-methods.html