To increase performance when exporting from an event-based outbound integration endpoint (OIEP), you can increase the number of threads for that OIEP. Multithreading is commonly used when a large amount of data goes to a downstream system on a regular basis and the downstream system is capable of handling it.

Multithreading uses more than one thread within a single background process (BGP). For example, in an export, multiple threads share the work of exporting the data, so the BGP produces one file / message more quickly than a BGP using a single thread.

Multithreading is different from parallel processing, where a queue with a size greater than 1 allows more than one BGP to run at the same time. For example, multiple imports working on different files / messages, or multiple exports producing different files / messages, clear a backlog more quickly than a queue with a size of 1.

Note: Managing the queue size is not applicable when using the 'One Queue' BGP Scheduler option (defined in the BGP One Queue topic of the System Setup documentation) since the system manages the number of BGPs running concurrently based on overall load on the server.

Consider the following points before increasing the number of threads to more than one:

- The STEP system hardware should have enough spare resources to support multithreading. Enabling multithreading provides no benefit if the environment is already fully utilized.
- While multithreading can improve STEP performance, the overall effect may not be beneficial if it overloads the downstream (receiving) system.
- Before implementing multithreading in your production system, test the settings in a similarly sized non-production environment with a similar overall load to find the best performance gain. Start with a small number of threads and a small amount of data, then increase each gradually.

Multithreading Setup

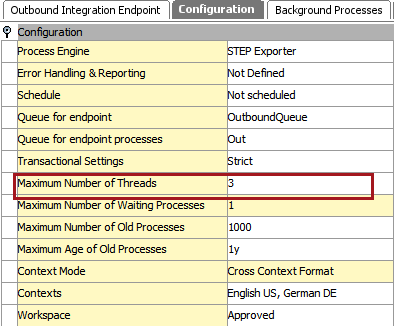

Although the 'Number of threads' setting is available on the Configuration tab for both static and event-based OIEPs, it only affects event-based OIEPs.

The default thread setting is one (1), in which case the endpoint produces a single message at a time and processes all events in the batch serially. Increasing the number of threads divides each batch by the number of threads so that the contents of a batch can be processed in parallel.
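To make the division concrete, the following minimal sketch splits one event batch into per-thread sub-batches whose sizes differ by at most one. It assumes contiguous chunks; the function name `split_batch` is hypothetical, and STEP's actual assignment of events to threads is not described here.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split_batch(events: Sequence[T], num_threads: int) -> List[List[T]]:
    """Hypothetical helper: divide one batch into num_threads near-equal sub-batches."""
    if num_threads < 1:
        raise ValueError("num_threads must be at least 1")
    base, remainder = divmod(len(events), num_threads)
    chunks: List[List[T]] = []
    start = 0
    for i in range(num_threads):
        # The first `remainder` threads each receive one extra event.
        size = base + (1 if i < remainder else 0)
        chunks.append(list(events[start:start + size]))
        start += size
    return chunks


if __name__ == "__main__":
    print([len(c) for c in split_batch(list(range(100)), 2)])  # [50, 50]
    print([len(c) for c in split_batch(list(range(10)), 3)])   # [4, 3, 3]
```

With one thread, the whole batch stays in a single chunk, which matches the serial default.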

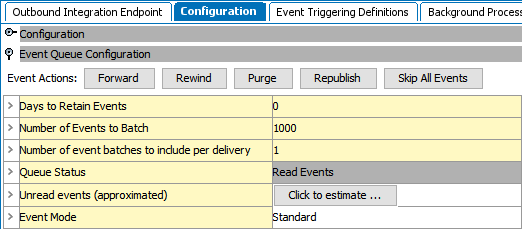

Because multiple event batches per message are not supported with multiple threads, when 'Number of threads' is greater than one (1), the 'Number of event batches to include per delivery' setting (shown in the image above) is automatically set to one (1). The following warning is written to the BGP execution report and to the application server log:

Number of threads are [x]. Number of batches per delivery will be set to 1 for integration endpoint [ID] as this is the only allowed value when multiple threads are used. Requested number of batches were [y].
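The override rule can be summarized as a small function. This is an illustrative sketch only: the function name is hypothetical, and it assumes the warning is produced only when the requested value differs from 1, which the description above does not state explicitly.

```python
from typing import Optional, Tuple


def effective_batches_per_delivery(
    endpoint_id: str, num_threads: int, requested_batches: int
) -> Tuple[int, Optional[str]]:
    """Hypothetical sketch: return (batches per delivery actually used, warning or None)."""
    if num_threads <= 1:
        # Single-threaded: the configured value is used unchanged.
        return requested_batches, None
    if requested_babatches_placeholder := None:
        pass
    return 1, None
```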

When all threads are complete, the batch yields a single message, consistent with the 'Strict' transactional setting required by event-based OIEPs. The number of threads is typically increased for particularly critical information, where the speed at which the message is produced is key. However, increasing the setting beyond the capabilities of the hardware degrades the overall performance of the application server, so adjust the number of threads with care.

Increasing the Batch Size

When you increase the number of threads, the amount of data each thread processes decreases proportionally (for example, a batch size of 100 using two threads is split into two sub-batches of 50). When adjusting the number of threads, it is therefore worthwhile to experiment with increasing the batch size so that each thread has a reasonable amount of data to process.
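The arithmetic behind this advice is simple, and a short sketch can help when planning test runs. Both function names are hypothetical, and the per-thread target is a tuning starting point you would validate by testing, not a STEP setting.

```python
def per_thread_events(batch_size: int, num_threads: int) -> int:
    """Largest number of events any single thread receives from one batch (ceiling division)."""
    return (batch_size + num_threads - 1) // num_threads


def batch_size_for_target(per_thread_target: int, num_threads: int) -> int:
    """Batch size that gives each thread roughly per_thread_target events."""
    return per_thread_target * num_threads


if __name__ == "__main__":
    print(per_thread_events(100, 2))      # 50
    print(per_thread_events(100, 4))      # 25
    print(batch_size_for_target(100, 4))  # 400
```

For example, if a single-threaded endpoint performs well with a batch size of 100, keeping roughly 100 events per thread with four threads suggests trying a batch size of about 400.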

Serialized and Parallelized

Fetching the batch of events runs serially, and the data is distributed as evenly as possible across the threads. Between fetching the batch and delivery, the pre-processing, main processing, and post-processing steps run in parallel. After post-processing (if applicable), the data is handed over to the delivery stage, which again runs serially, so events are delivered as if everything had executed serially. With this in mind, consider the endpoint's schedule and ensure that it checks for events as frequently as it would if the endpoint were single-threaded.
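The serial-parallel-serial flow can be modeled with Python's standard `concurrent.futures.ThreadPoolExecutor`. This is a conceptual sketch under stated assumptions, not STEP's implementation: the event queue, the processing steps, and all function names are hypothetical stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def fetch_batch(queue: List[str], batch_size: int) -> List[str]:
    # Serial stage: take the next batch off a hypothetical in-memory event queue.
    batch = queue[:batch_size]
    del queue[:batch_size]
    return batch


def split_evenly(batch: List[str], num_threads: int) -> List[List[str]]:
    # Distribute the batch as evenly as possible; sizes differ by at most one.
    base, remainder = divmod(len(batch), num_threads)
    chunks, start = [], 0
    for i in range(num_threads):
        size = base + (1 if i < remainder else 0)
        chunks.append(batch[start:start + size])
        start += size
    return chunks


def process_chunk(chunk: List[str]) -> List[str]:
    # Parallel stage: stand-ins for pre-processing, main processing, post-processing.
    pre = [event.strip() for event in chunk]
    main = [event.upper() for event in pre]
    return [f"<event>{event}</event>" for event in main]


def deliver(parts: List[List[str]]) -> str:
    # Serial stage: combine every thread's output, in batch order, into one message.
    return "".join(item for part in parts for item in part)


def run_once(queue: List[str], batch_size: int, num_threads: int) -> str:
    batch = fetch_batch(queue, batch_size)
    chunks = split_evenly(batch, num_threads)
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        # map() returns results in input order, so event order is preserved.
        # If any chunk raises, list() re-raises and nothing is delivered.
        processed = list(pool.map(process_chunk, chunks))
    return deliver(processed)


if __name__ == "__main__":
    events = ["a", "b", "c", "d", "e"]
    print(run_once(events, batch_size=4, num_threads=2))
    # <event>A</event><event>B</event><event>C</event><event>D</event>
    print(events)  # ['e'] remains for the next scheduled run
```

Because delivery waits for all threads and preserves order, the output looks the same as a single-threaded run. Only the middle stage gets faster, which is why the schedule still matters.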

When a batch is processed in parallel (using multiple threads), this is recorded in the endpoint's execution report.