Before setting up the Kafka Streaming Receiver, consider the following areas:

- Compatibility
- Message size limits
- Topic partitions
- Topic offset
- Dynamic membership
- SASL authentication, if needed

Each area is explained in further detail below.

When configuring Kafka, STEPXML is recommended for the messaging format. Other formats supported for integration with STEP will also work, but the documentation has been written for STEPXML.

Compatibility

Integration with Kafka for event messaging is supported for the following versions of Apache Kafka:

- 3.5.1
- 3.4x
- 3.2x
- 3.0x
- 2.6x
- 2.5x
- 2.4x

For information on an alternate receiver option using Kafka, refer to the Kafka Receiver topic.

For information on the delivery option using Kafka, refer to the Kafka Delivery Method topic.

Message Size Limits

When using the Kafka Streaming Receiver, structure messages to include one object per file, or a small number of related objects that will consistently be smaller than the 1 MB (1048576 bytes) limit. The Kafka Streaming Receiver is designed to efficiently handle high volumes of small messages by quickly importing messages without the overhead of individual background processes for every message. Larger messages are considered inefficient and an anti-pattern in Kafka and are therefore not supported by the Kafka Streaming Receiver.

When you cannot avoid files larger than the 1 MB limit for an integration, consider using the REST Receiver instead of Kafka.

If messages are close to the limit or there are occasional large messages, use compression to reduce the size of the messages. As this can impact the performance of the system, it is only recommended when there are occasional larger messages.
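The size guidance above can be checked before a message is produced. The following is a minimal sketch, not part of STEP or of any Kafka client: the function name `size_report` is hypothetical, the strict "smaller than" comparison is a conservative reading of the limit, and the gzip figure is only a rough estimate. In Kafka, compression is normally enabled on the producer (for example through the `compression.type` setting) and applied to batches, so actual results will differ.

```python
import gzip

# 1 MB limit stated in this section.
MAX_MESSAGE_BYTES = 1_048_576


def size_report(payload: bytes, limit: int = MAX_MESSAGE_BYTES) -> dict:
    """Report raw and gzip-compressed sizes of one serialized message.

    Hypothetical helper for illustration only. The gzip size approximates
    what compression might achieve; it is not what the broker measures.
    """
    compressed = gzip.compress(payload)
    return {
        "raw_bytes": len(payload),
        "gzip_bytes": len(compressed),
        # Conservative assumption: a message must be strictly below the limit.
        "fits_raw": len(payload) < limit,
        "fits_gzip": len(compressed) < limit,
    }


if __name__ == "__main__":
    small = b"<STEP-ProductInformation/>"
    large = b"a" * 2_000_000  # highly repetitive, so it compresses very well
    print(size_report(small))
    print(size_report(large))
```

A message that fits only after compression is a candidate for the occasional-large-message case described above. A message that does not fit even when compressed is better routed through the REST Receiver.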

Topic Partitions

The Kafka Streaming Receiver can read from multiple partitions on a topic. Up to 10 consumers can run on the STEP side. This means that for a topic with 20 partitions, each consumer gets data from two partitions. IIEPs reading from topics with a higher number of partitions have greater priority than IIEPs reading from topics with fewer partitions or only one partition.
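The arithmetic behind the consumer limit can be sketched as follows. This is an illustration only: the function names are hypothetical, and the even split is an assumption, since the actual assignment of partitions to consumers is decided by Kafka's partition assignment, not by this calculation.

```python
from typing import List

# Maximum number of consumers on the STEP side, as stated above.
MAX_CONSUMERS = 10


def consumer_count(partitions: int, max_consumers: int = MAX_CONSUMERS) -> int:
    """Number of consumers for a topic, capped at max_consumers."""
    if partitions < 1:
        raise ValueError("a topic has at least one partition")
    return min(partitions, max_consumers)


def partitions_per_consumer(partitions: int,
                            max_consumers: int = MAX_CONSUMERS) -> List[int]:
    """Spread partitions as evenly as possible (assumed even split)."""
    consumers = consumer_count(partitions, max_consumers)
    base, extra = divmod(partitions, consumers)
    # The first `extra` consumers take one additional partition each.
    return [base + 1 if i < extra else base for i in range(consumers)]


if __name__ == "__main__":
    print(partitions_per_consumer(20))  # the 20-partition case from the text
    print(partitions_per_consumer(4))
```

For the 20-partition example, the sketch gives 10 consumers with two partitions each, matching the description above. With fewer than 10 partitions, each partition gets its own consumer.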

Topic Offset

The Kafka Streaming Receiver commits the offset when a message is imported, and there is no option to manage the offset directly in STEP. Each IIEP maintains its own Consumer Group ID, which is calculated from the System Name and the IIEP ID so that each consumer group is unique and manages its own offsets.

Dynamic Membership

The Kafka Streaming Receiver uses dynamic membership and does not include an option for scheduling. Without a schedule, the concept of invoking the endpoint does not exist. Instead, when an IIEP using the Kafka Streaming Receiver is enabled, the topics (the primary topic and the error topic, if configured), message size limitations, authentication, and broker connectivity are validated. Once all validation completes successfully, one thread is started for each partition on the topic, up to the limit of 10. Connectivity is maintained until it is interrupted by an error, the configuration of the IIEP is changed, or the IIEP is disabled in the workbench or via REST.

SASL Authentication

Simple Authentication and Security Layer (SASL) authentication (both SASL_PLAINTEXT with PLAIN and SASL_SSL with PLAIN, OAUTHBEARER, and SCRAM) is supported for the Kafka receiver and delivery options. Using SASL gives you more data security options and provides an alternative to the other authentication functionality supported by the Kafka connector, which includes support for AWS MSK, Heroku, and Aiven (with TLS Client Certificate Authentication).

The Kafka Streaming Receiver solution has been tested with SASL using JAAS (SASL_PLAINTEXT) and mTLS.

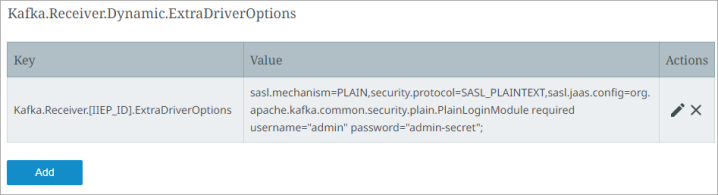

Use the Self-Service UI to edit the Kafka.Receiver.Dynamic.ExtraDriverOptions configuration property, where 'Dynamic' is a placeholder for your endpoint ID ([IIEP_ID]).

Important: Prior to configuration, dropdown parameters that rely on a property are empty. Hovering over the dropdown or clicking a dropdown displays the required property name to configure. To display the value(s), in the Self-Service UI, select the environment, and on the 'Configuration properties' tab, configure the property for your system. Refer to the Self-Service User Guide for information about setting configuration properties, including the use of the ${CUSTOMER_SECRETS_ROOT} and ${CUSTOMER_CONFIG_ROOT} variables.

Allow a few minutes for changes made in the Self-Service UI 'Configuration properties' tab to display in the workbench.

For SaaS systems, properties are set within the Self-Service UI by going to the Configuration Properties tab for your environment. Some changes may require you to restart the server and/or user interface (i.e., the workbench) before they take effect. If the properties you need are not shown, submit an issue within the Stibo Systems Service Portal to complete the configuration.

Below is an example configuration for PLAIN username/password authentication in a SaaS environment.

Configure the value of the ExtraDriverOptions as shown in the example below (you can copy this format):

- Key field: This should indicate the IIEP ID and must be entered as:

  Kafka.Receiver.[IIEP_ID].ExtraDriverOptions (Replace [IIEP_ID] with the STEP ID of your endpoint.)

- Value field: Enter the following string:

  sasl.mechanism=PLAIN,security.protocol=SASL_PLAINTEXT,sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="admin-secret";

For SASL_SSL with PLAIN username/password authentication, the Keystore configuration in the SSL part of the Kafka receiver or delivery option can be omitted. If the Kafka server is not required to trust the Stibo Systems SSL certificate, no Keystore is needed. A Truststore Location / Password is required to indicate that your system trusts the Kafka server's certificate.

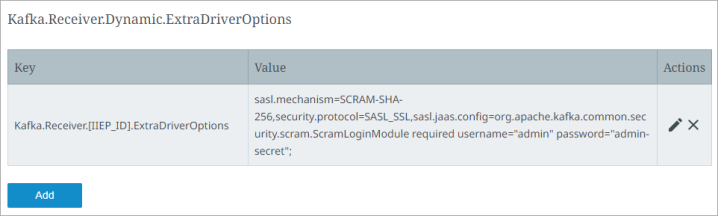

Below is a sample configuration for SCRAM authentication in a SaaS environment:

- Key field: This should indicate the IIEP ID and must be entered as:

  Kafka.Receiver.[IIEP_ID].ExtraDriverOptions (Replace [IIEP_ID] with the STEP ID of your endpoint.)

- Value field: Enter the following string:

  sasl.mechanism=SCRAM-SHA-256,security.protocol=SASL_SSL,sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="admin" password="admin-secret";

All of the configuration options are taken from the confluent.io documentation on configuring SASL authentication: https://docs.confluent.io/platform/current/kafka/overview-authentication-methods.html.
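The Key and Value strings in the PLAIN and SCRAM examples above follow the same pattern: comma-separated `name=value` pairs, with the JAAS entry ending in a semicolon. The sketch below assembles them programmatically to reduce typing errors. It is an illustration only: the helper names and the endpoint ID `MyEndpoint` are hypothetical, the format is inferred from the two examples, and no escaping is applied, so credentials containing commas or double quotes may need handling that is not covered here.

```python
from typing import Dict


def property_key(iiep_id: str) -> str:
    """Build the configuration property name for one endpoint."""
    return f"Kafka.Receiver.{iiep_id}.ExtraDriverOptions"


def jaas_config(login_module: str, username: str, password: str) -> str:
    """Build the sasl.jaas.config entry, including the trailing semicolon."""
    return f'{login_module} required username="{username}" password="{password}";'


def extra_driver_options(options: Dict[str, str]) -> str:
    """Join options as comma-separated name=value pairs (format assumed
    from the documented examples; insertion order is preserved)."""
    return ",".join(f"{name}={value}" for name, value in options.items())


if __name__ == "__main__":
    scram = extra_driver_options({
        "sasl.mechanism": "SCRAM-SHA-256",
        "security.protocol": "SASL_SSL",
        "sasl.jaas.config": jaas_config(
            "org.apache.kafka.common.security.scram.ScramLoginModule",
            "admin",          # placeholder credentials from the example
            "admin-secret",
        ),
    })
    print(property_key("MyEndpoint"))  # hypothetical endpoint ID
    print(scram)
```

The printed values can then be pasted into the Key and Value fields of the 'Configuration properties' tab.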